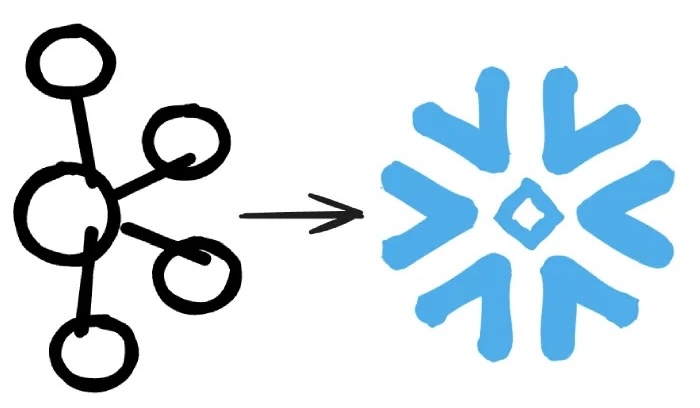

Every year, zettabytes of data are transferred over the Internet. Managing, processing, and storing that data is a complex task that requires cutting-edge tools. Many tools can be used, but two technologies are almost essential to every modern data pipeline: Kafka and Snowflake.

If you are planning to stream a large amount of data, Kafka is usually the best choice. Scalability, reliability, low latency, and popularity are the reasons Kafka stands out. It is by far the most popular event streaming platform and the go-to option for many streaming use cases.

Since you need to process and store the data somewhere, Snowflake is one of the top contenders. If you are wondering why Snowflake is so popular, look at its user satisfaction: it's through the roof. Snowflake took most of the drawbacks and oversights of other data storage solutions and addressed them. Probably its most important feature is that storage and compute scale separately.

What is Kafka Connect, and why did we decide to use it?

Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other data systems. It provides streaming integration between Kafka and other data sources or sinks, enabling seamless data movement and transformation. Kafka Connect uses connectors to ingest data into Kafka topics or export data from Kafka topics to external systems.

Kafka Connect is made for use cases like ours: moving messages from Kafka topics into an external system such as Snowflake.
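To make the connector idea more concrete, here is a minimal sketch of how a sink connector might be registered through the Kafka Connect REST API. It is not the setup from the full blog. The connector name, topics, account URL, user, database, and schema are hypothetical placeholders, the worker address `http://localhost:8083` assumes a local Kafka Connect worker on its default port, and the property names follow the Snowflake Kafka connector's documented configuration. The request is only built here, not sent.

```python
import json
import urllib.request


def build_sink_connector(name, topics, account_url, user, database, schema):
    """Return the JSON payload Kafka Connect expects when registering a connector."""
    return {
        "name": name,
        "config": {
            # Sink connector class shipped with the Snowflake Kafka connector.
            "connector.class": "com.snowflake.kafka.connector.SnowflakeSinkConnector",
            "tasks.max": "1",
            # Kafka Connect takes the topic list as one comma-separated string.
            "topics": ",".join(topics),
            "snowflake.url.name": account_url,
            "snowflake.user.name": user,
            # Placeholder only: supply the real key through a secrets mechanism.
            "snowflake.private.key": "<private-key-placeholder>",
            "snowflake.database.name": database,
            "snowflake.schema.name": schema,
            "key.converter": "org.apache.kafka.connect.storage.StringConverter",
            "value.converter": "com.snowflake.kafka.connector.records.SnowflakeJsonConverter",
        },
    }


def build_register_request(connect_url, payload):
    """Prepare (but do not send) a POST to the Kafka Connect /connectors endpoint."""
    return urllib.request.Request(
        url=f"{connect_url}/connectors",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical values, for illustration only.
payload = build_sink_connector(
    name="snowflake-sink",
    topics=["orders", "payments"],
    account_url="myaccount.snowflakecomputing.com:443",
    user="kafka_connector_user",
    database="ANALYTICS",
    schema="RAW",
)
request = build_register_request("http://localhost:8083", payload)
print(request.get_method(), request.full_url)
```

Passing `request` to `urllib.request.urlopen` would submit it to a running worker. Keeping the payload builder separate from the HTTP call makes the configuration easy to inspect or version before anything is deployed.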

This is just an intro. To see how we used Kafka Connect to consume Kafka messages and store them in Snowflake, check out the full blog (for free) at: